Research

If I had to put a label on it, I’d say my research lives under the big (and dense) umbrella of Networked Edge AI Systems. In practice, this means exploring how components with embedded intelligence (from the tiniest microcontrollers to large cloud platforms) can cooperate, reason, and adapt to provision AI services efficiently. A more recent theme in my research is automating the Edge AI lifecycle across heterogeneous environments, making systems more dependable and less reliant on human intervention. I’m genuinely fascinated by the “plumbing” that makes all this possible: protocols, orchestration layers, and the mechanisms that keep distributed intelligence running when computing and networking resources are scarce or unpredictable. Some of my current and recent research topics are showcased below.

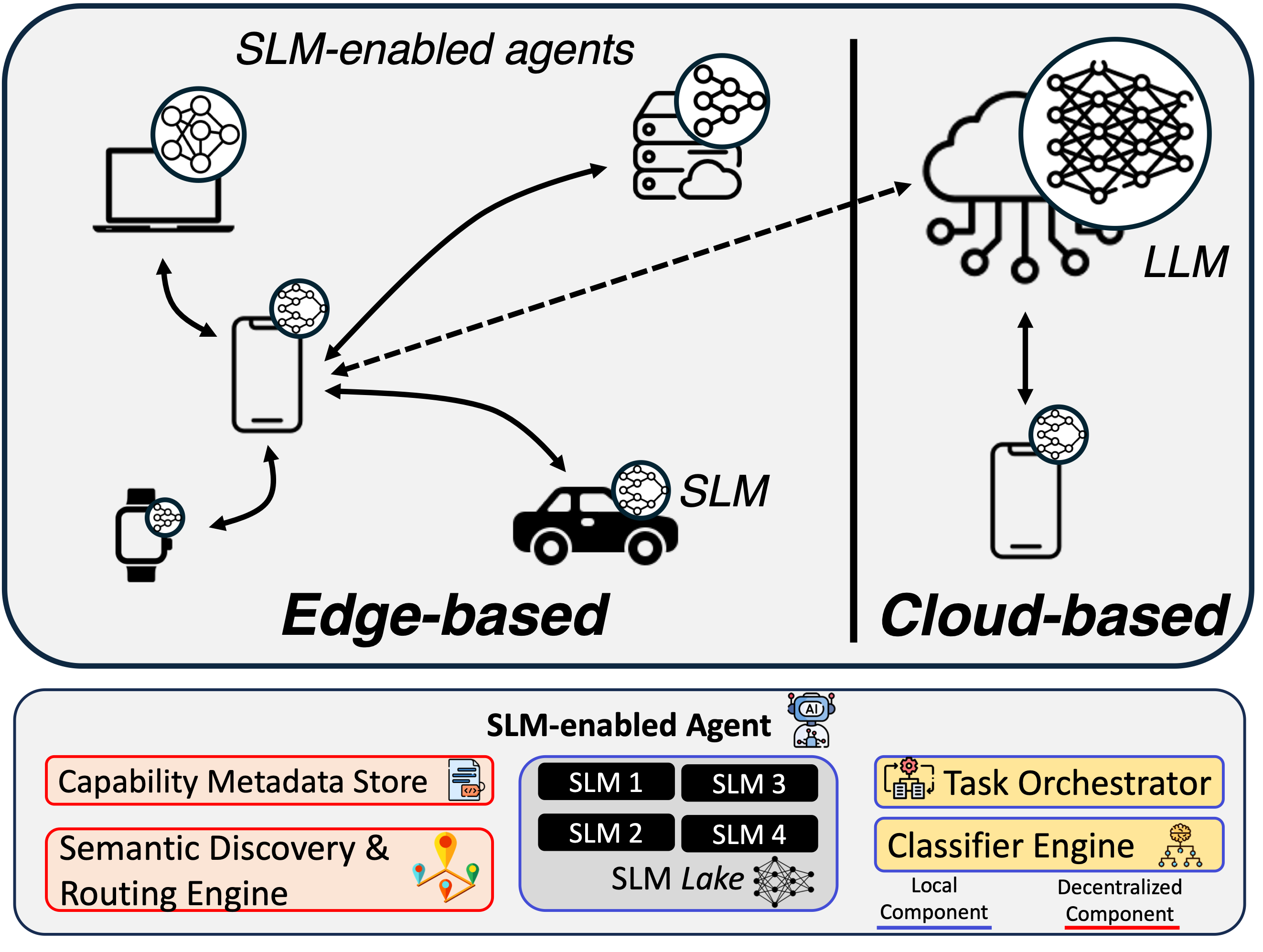

Collaborative GenAI Inference at the Edge

How devices, edge nodes, and the cloud cooperate to serve LLM requests under latency, energy, and cost constraints.

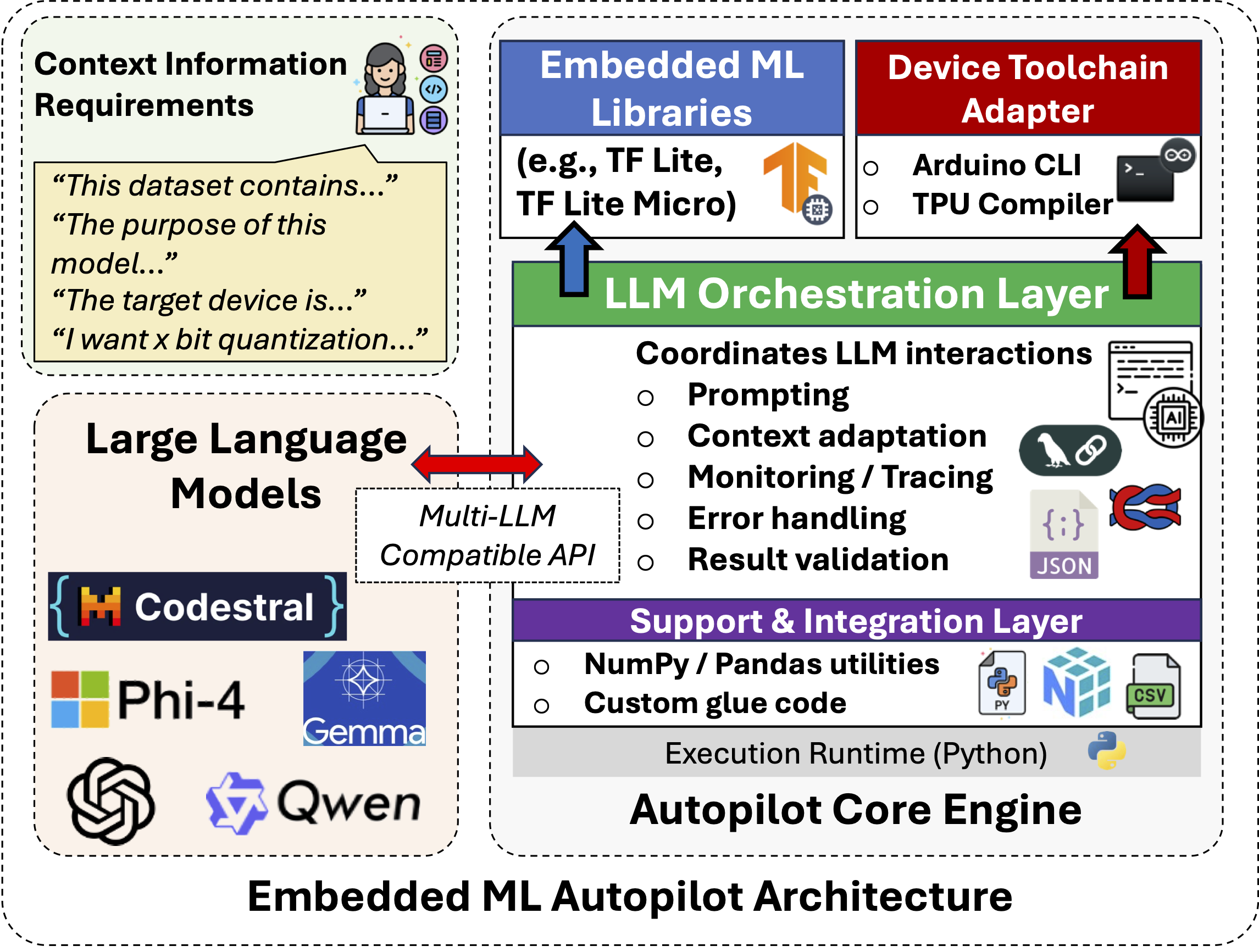

Autopilot for Edge AI Lifecycles

LLM-powered automation of data preparation, model conversion, quantization, and code generation for MCU-class devices.

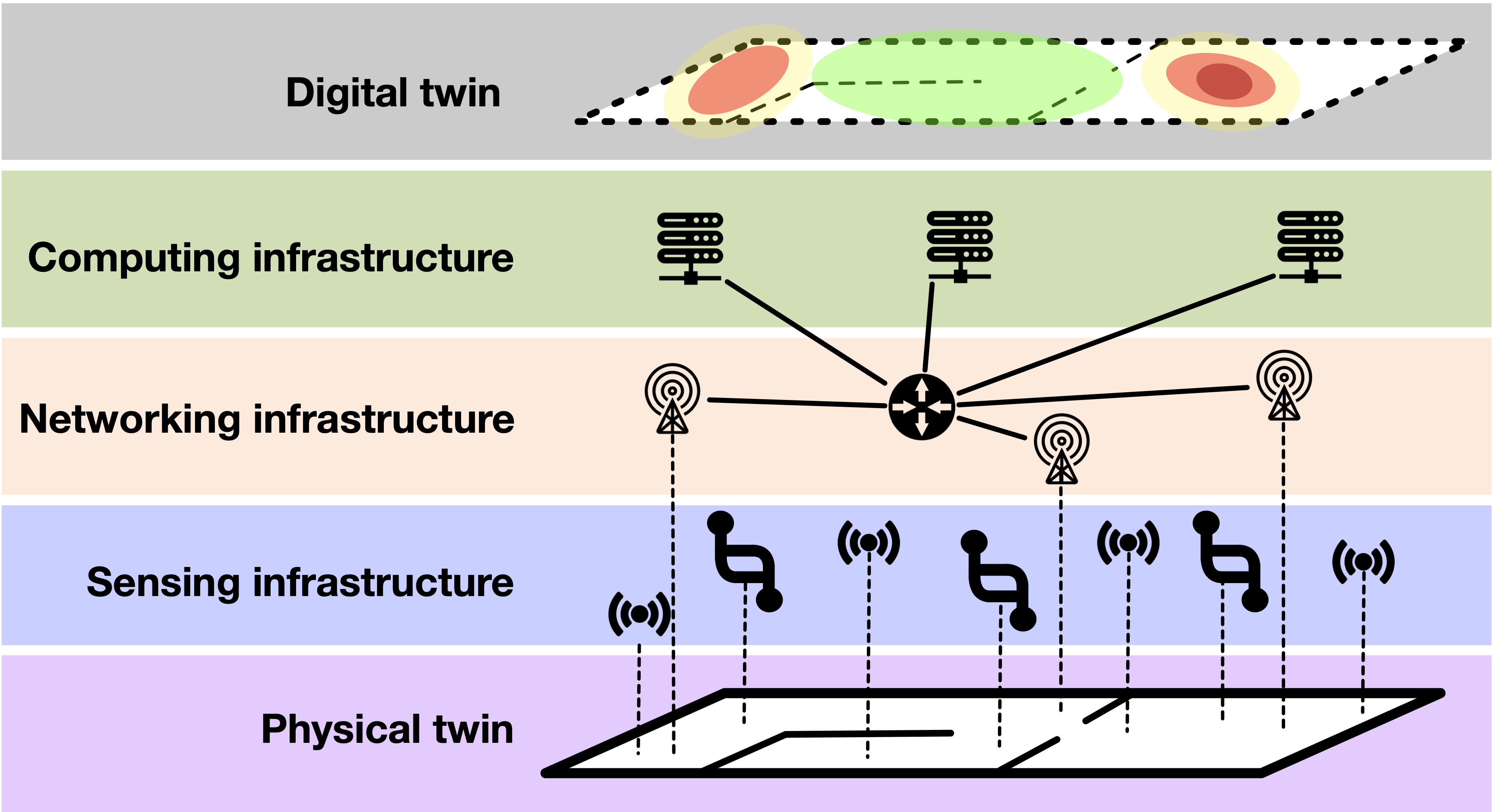

Networked Digital Twins

Real-time twins over heterogeneous access networks, with Generative AI for inference, analytics, and resilience.

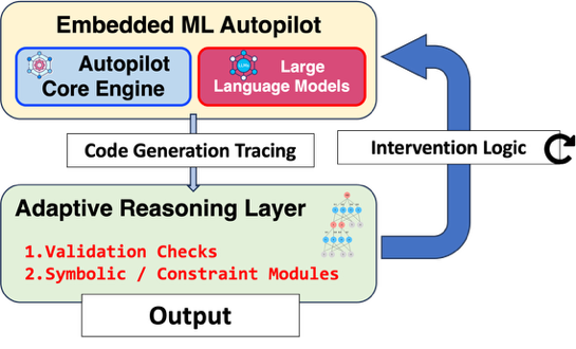

Neuro-Symbolic Reasoning for Edge AI

Combining symbolic methods with LLMs to improve planning, verification, and on-device reliability.